You’ve probably seen a slick cover and thought, nice. Then you learn a machine spat it out from someone else’s art, and your stomach does that little sour flip. I’m telling you, it’s messy: models gobble up scans and styles, they remix without asking, and that neat, glossy image on the book might be wearing another artist’s creative fingerprints, unpaid and uncredited. That makes you squirm, it raises legal alarms, and if you care about craft, it starts to feel wrong. So stick around, and I’ll show you why.

Key Takeaways

- AI can imitate living artists’ distinctive styles without permission, raising ethical concerns about credit and income.

- Training datasets often lack transparency, making it hard to verify whether source images were used legally or with consent.

- Automated generation risks producing derivative or infringing designs, triggering copyright disputes and legal uncertainty.

- Publishers and designers face job displacement and shifting skill demands, creating industry tension and unequal opportunities.

- Rapid, low-cost production may prioritize speed over artistic quality and brand consistency, harming reader trust and engagement.

How AI Models Learn From Existing Art

When you feed an AI a pile of art, it doesn’t stare at it like a critic over tea — it munches on pixels and patterns until it can predict the next brushstroke.

You watch as it maps colors, textures, and composition, tasting the mood of a piece like coffee with too much sugar. I point, you nod, and the model learns lines and loops: the subtle tilt of an eyebrow, the grain of canvas.

That’s artistic influence: not homage, but data becoming habit. You get creative replication, echoes that feel familiar but aren’t quite the original.

It’s thrilling, a little uncanny, like a student who copies your flourish and improves it. You grin, worry, then play with the results, hungry for the next surprise.

Legal Risks and Copyright Questions

You’re about to step into the legal thicket, and I’ll hold the flashlight while you squint at the signs.

Who actually owns that source image, what was fed into the training dataset, and whether your shiny cover counts as a derivative work — those questions hit like a cold splash of reality.

Let’s sort out ownership claims, demand dataset transparency, and brace for disputes before your book hits the shelves, or a lawyer’s inbox.

Source Image Ownership

If you’ve ever flipped through a stack of draft covers and wondered who actually owns that grainy beach photo or the vintage portrait the AI just mashed up, I’ve got news: it’s messy, and it won’t tidy itself.

I poke around the edges of image rights, and you get tug-of-war drama. You want to innovate, but someone else might claim the pixels. I squint at metadata, sniff out provenance, then breathe out and face the risk: ownership disputes can derail a launch.

- Track sources early, like breadcrumbs you’ll actually follow.

- Get licenses in writing; don’t rely on polite nods.

- Line up replacement images before a takedown notice wakes you.

Stay bold, but don’t be reckless.

Training Dataset Transparency

Because the models learned from other people’s work, you should care about what went into them, and so should your readers, your lawyers, and that one scowling rights manager in the corner.

I’ll be blunt: if you want to innovate, you’ve got to demand hard details. Ask for dataset composition numbers, source lists, and the provenance trail, like a detective tracing fingerprints.

Push for transparency standards that are specific, not corporate fluff. You’ll sleep better knowing a model wasn’t fed a library of questionable images.

Tell your team to document, tag, and log every feed, every scrape, every license. It’s tedious, sure, but it’s also practical risk management.

Do the paperwork now, ship bold covers tomorrow.

Derivative Work Disputes

Knowing what went into a model matters for more than your conscience: you’ll also want protection when someone starts yelling “infringement” across the room.

I tell you this because derivative work disputes hit fast, like a coffee spill on a manuscript. You want innovation, sure, but also respect for creative originality and artistic integrity.

I’ll walk you through the sticky parts, and we’ll laugh nervously.

- Who owns the image the model echoed, and did the output copy it or merely draw inspiration from it?

- Can you prove the prompt, the process, and the edits you made?

- What licenses, credits, or indemnities will actually shield you?

Keep records, get smart counsel, and don’t assume novelty equals safety.

The Impact on Professional Cover Designers

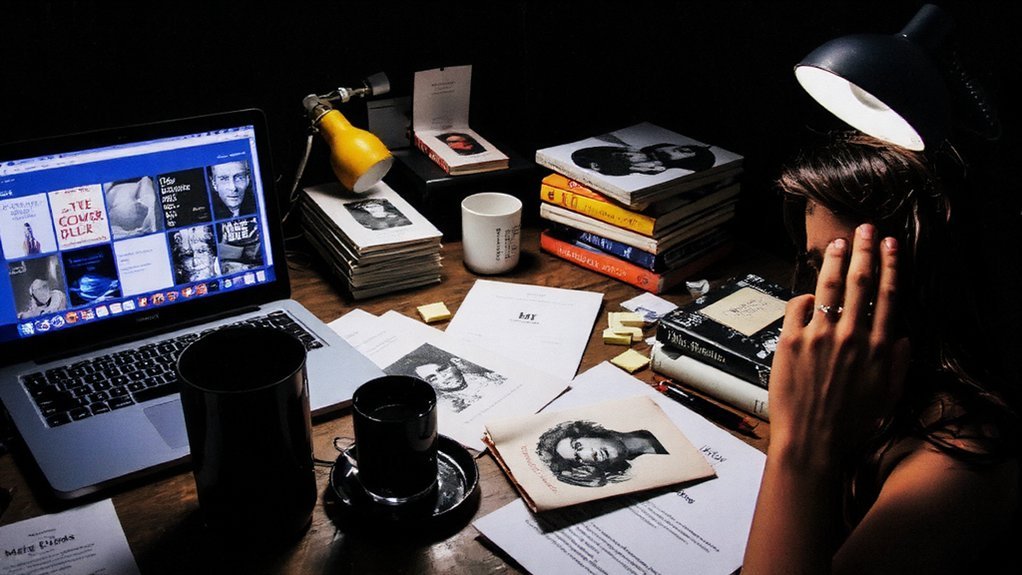

When I first saw an AI spit out a bestselling-looking cover in thirty seconds, I laughed—then my heart did a weird little skip. You watch your trade shift, fingers stained with paint or coffee, and wonder where you fit.

Creative disruption hums in the studio; design standards get questioned overnight. You face thinner freelance work, lower bids, and clients asking for “just an AI mock.” The hit to employment is real: roles get reshaped, some vanish, others morph.

You defend artistic integrity, sketching by hand, refusing templates that feel dead. Quality concerns pop up, obvious to your trained eye, but some publishers don’t notice until books sit unread.

Your adaptation isn’t passive: you learn prompting, set boundaries, and evolve with the industry, still stubborn, still proud.

Ethical Concerns Around Style Mimicry

If you’ve spent nights sketching a spine until your hand cramped, you’ll feel the sting: AI can mimic a living artist’s style so closely it’s like watching someone photocopy your signature. You notice brushstrokes you taught yourself, colors you hoard, a quirky curve, all lifted and reproduced without credit.

You want bold change, but you also want respect. This is about style appropriation, and it hits artistic integrity where it counts.

- You recognize your fingerprint in a generated cover, and it’s unnerving.

- You want tools that elevate, not erase, your craft.

- You crave clear permission, attribution, and a fair remix culture.

I’m blunt, but hopeful: innovation shouldn’t steal the soul of the maker.

Market Effects on Reader Perception and Sales

Because a cover is the handshake before the conversation, I’ll tell you straight: AI-made covers can change how readers judge a book before they read a single line. You notice visual appeal first, you feel an emotional response, and you decide in seconds.

Smart creators watch market trends and tweak cover design to match genre expectations, because reader engagement rides on that instant spark. You’ll test cover variants, track what readers in your genre respond to, and measure sales like a lab scientist with better coffee.

Sometimes AI nails a vibe, sometimes it feels off — you laugh, you tweak, you learn. Those tiny shifts steer purchase decisions, nudge browsing thumbs, and reshape how whole audiences sense a story’s promise.

Publisher and Author Adoption Dilemmas

Since publishers and authors are still squinting at AI-generated covers like they’re reading a weird first draft, let’s plunge right into the awkward choices you didn’t ask for but now must make.

You want novelty, but you also want control, and that tension smells like sizzling opportunity. I watch you weigh the author’s perspective against the publisher’s strategy, fingers hovering between click-to-accept and burn-the-mockup.

- Will you trust a machine’s aesthetic gut, or insist on human nuance?

- Do you prioritize speed-to-market, or protect brand voice and royalties?

- Who signs off when a generated image echoes someone’s likeness?

You lean forward, tasting both risk and thrill. I nudge you: experiment boldly, yes, but keep contracts tight, workflows clear, and your good sense sharper than any filter.

Possible Paths for Responsible Use and Regulation

Okay, you’ve wrestled with the ethics, the contracts, the weird art-director stare-off where a publisher and an author both suspect the AI of being smug.

I’ll walk you through practical paths, with a grin and a clipboard. You can push for clear regulatory frameworks that mandate disclosure, provenance tags, and fair-use audits.

You can build studio routines that preserve creative integrity: human-led concepting, iterative prompts, and signed credits.

Try pilot programs, public feedback loops, and licensing pools that pay artists, yes, even the grumpy ones.

Imagine tactile proofs, paper in hand, fingers tracing a spine while you decide.

Start small, measure impact, then scale. You’ll protect creators, embrace innovation, and still sleep at night.